Nov 2025: The Risk Your Workforce Poses

November 2025

The state of AI at work in Ireland - November 2025

AI adoption is accelerating. Capability, governance, and preparedness are not keeping pace.

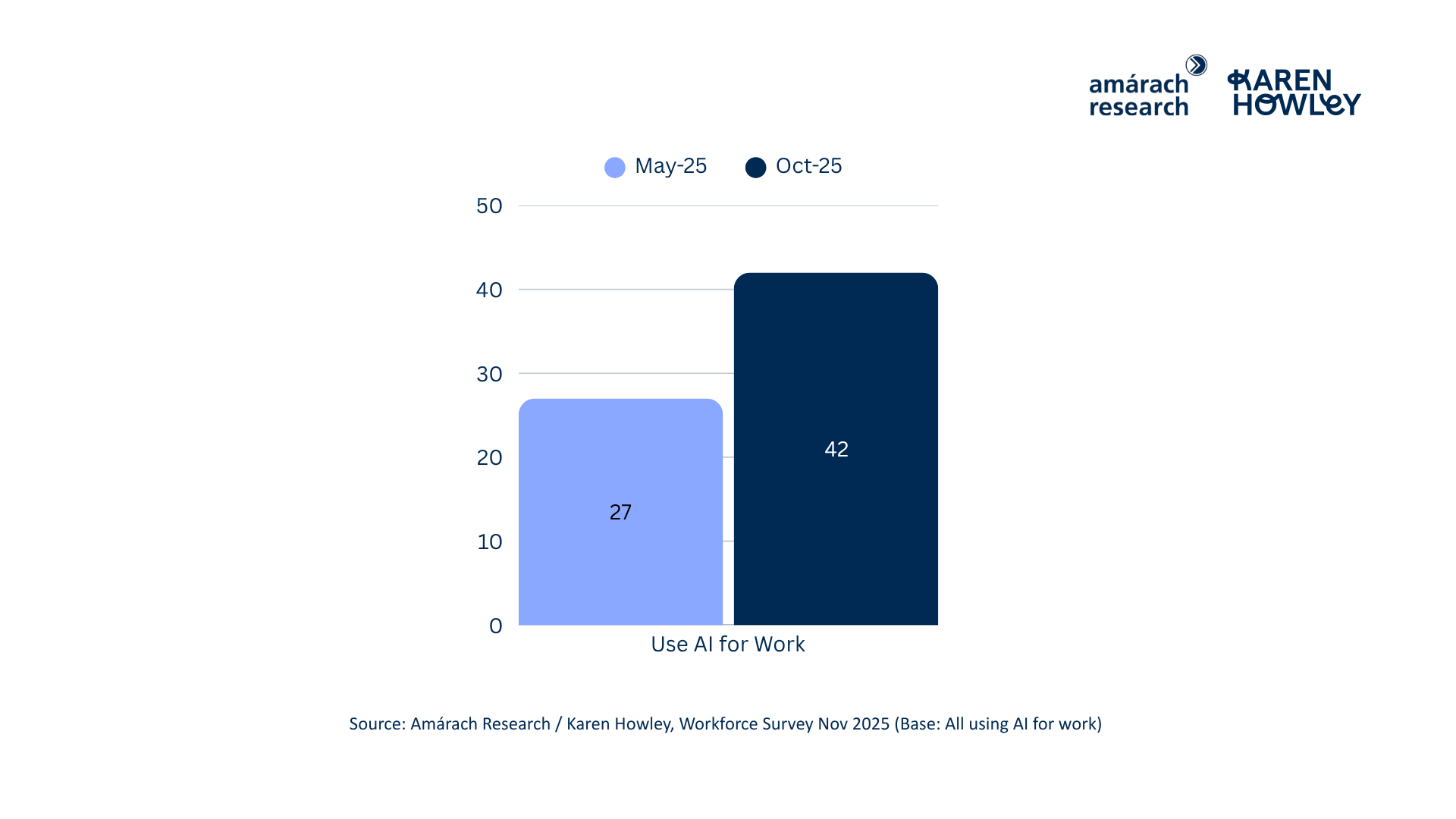

AI use in the Irish workforce continues to rise. Forty-two per cent of full- and part-time employees now use AI for work, a 15-point increase since May. Adoption is stronger in larger organisations (47%) than in SMEs (40%), perhaps reflecting the structural advantages of scale, access, and dedicated IT support.

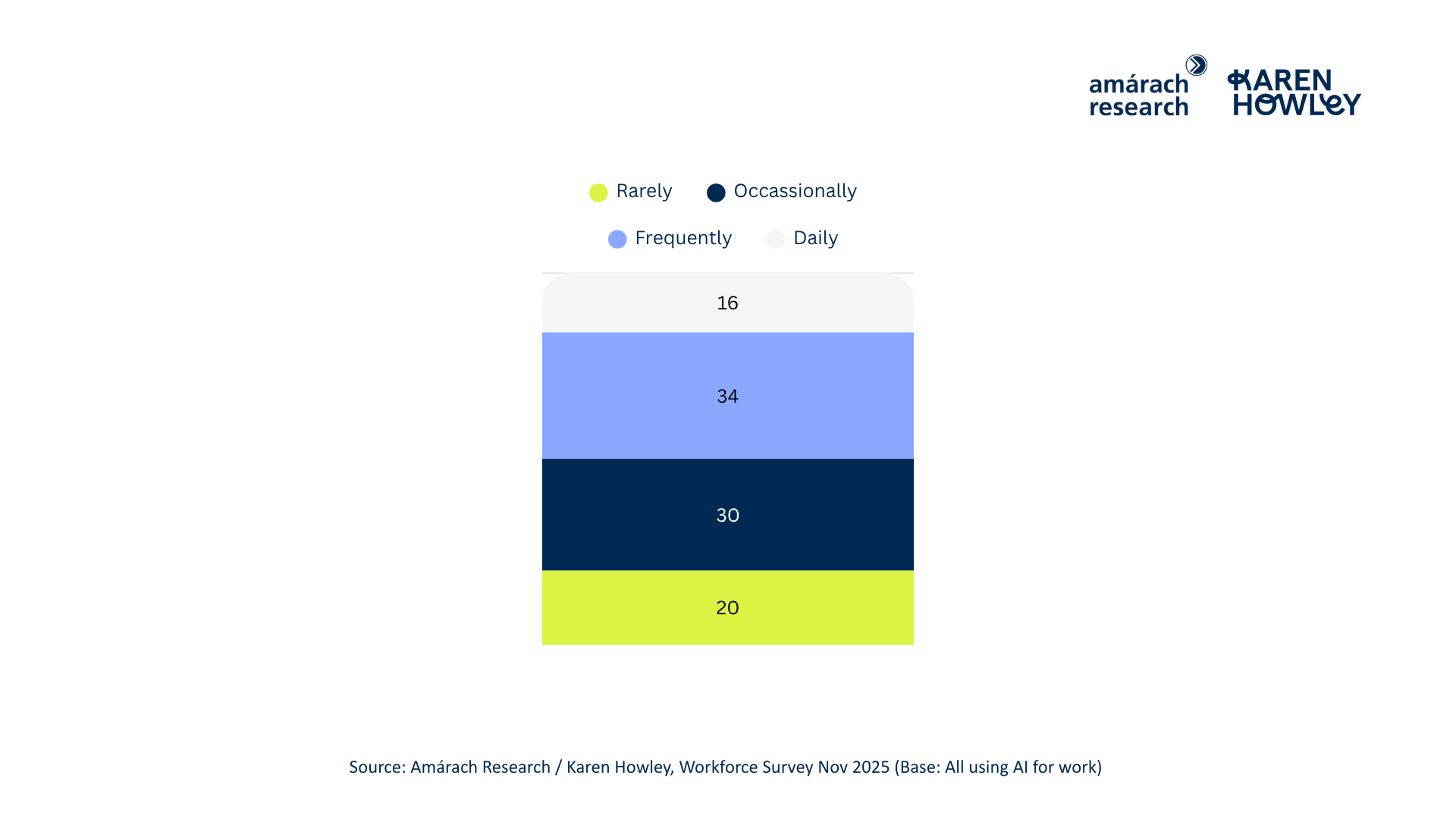

AI use is growing fast, but true adoption remains limited. Only 16% use it daily, while half engage just a few times a week or less.

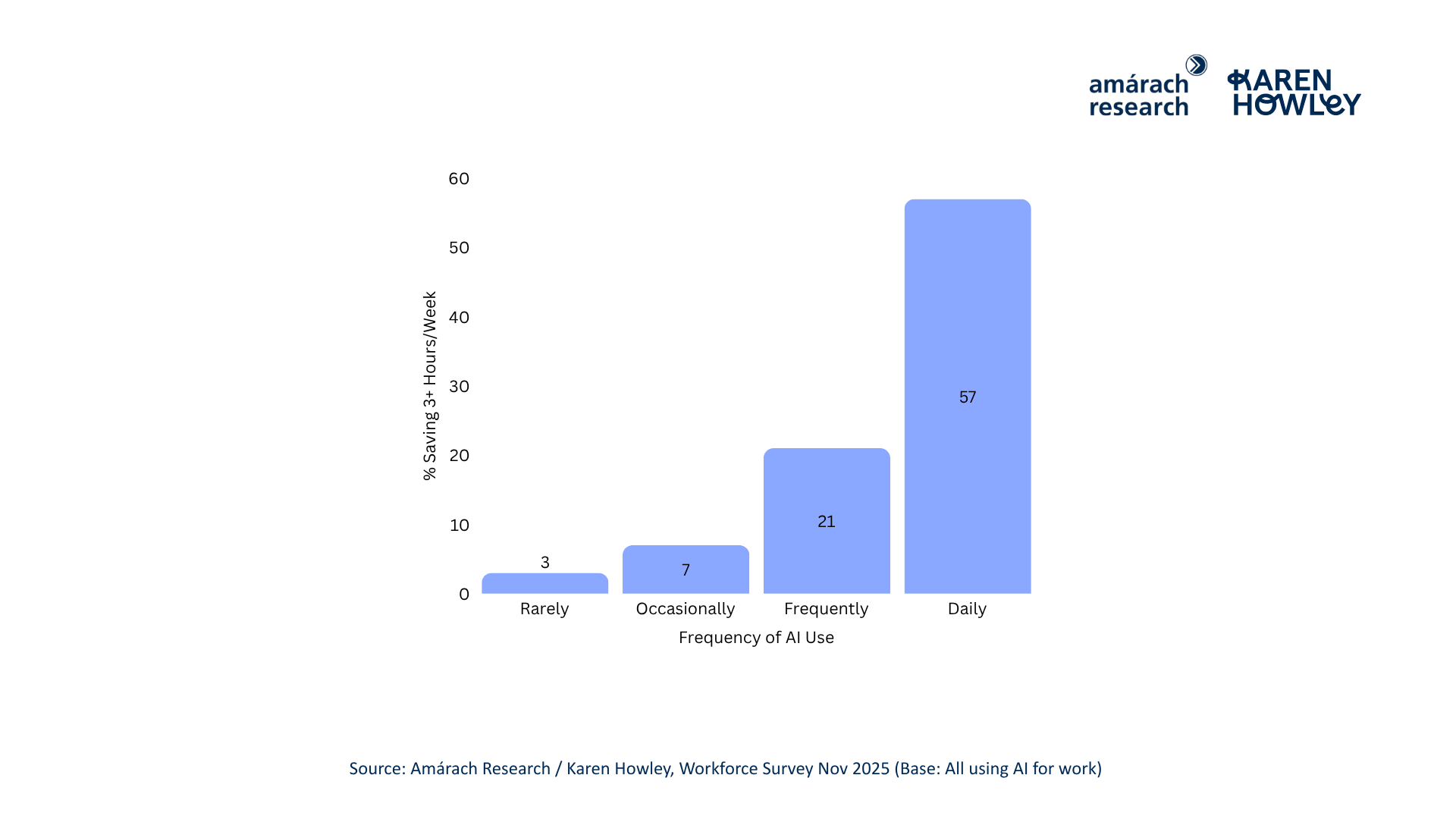

Frequency matters: regular users are six times more likely to save three or more hours a week than occasional users.

We see a clear pattern emerging in the data - learning drives use. Almost three-quarters of frequent users have received some form of AI training or informal learning, compared with just 58% of infrequent users. Among those with no training (33%), 42% use AI rarely.

Continuous learning is the key to driving frequency of use. Finding time for hands-on learning and experimentation at least once a quarter appears to be the sweet spot. Yet 58% of the workforce falls short of that cadence, which stands in sharp contrast to the pace of technological change. AI evolves faster than almost any technology before it. Without continuous learning, skills fall behind the systems the workforce is meant to use and govern.

Despite this gap, expectations are rising. Over a third of employees (37%) believe AI will take over the most repetitive 20% of their work within a year - a belief strongest among younger workers and frequent users. The optimism is clear, but the readiness to capture it is not.

The Tools People Use and the Risks They Don’t See

AI adoption has outpaced governance. Now, organisations must catch up or face escalating risk.

Three in four employees using AI for work (75%) have used consumer-grade tools such as the free versions of ChatGPT, Gemini, or Claude for work tasks. Most began experimenting long before policies, licences, or training were in place.

Consumer-grade tools bring serious exposure:

Business data entered in prompts may train future models unless privacy settings are manually changed.

Security controls such as multi-factor authentication are not enforced at organisational level.

Licences and user permissions cannot be centrally managed.

GDPR compliance is not guaranteed.

New features launch without internal risk review.

Copilot is the most common enterprise platform, with half of AI users reporting access through either Copilot Chat (23%) or Copilot for Microsoft 365 Premium (27%). Smaller groups report paid access to ChatGPT (19%), Gemini (10%), Claude (6%), Grok (5%), or Perplexity (4%).

But paid does not always mean secure. For example, a ChatGPT Business plan offers enterprise protections that the consumer-focused ChatGPT Plus plan does not.

This pattern reveals a clear governance gap between where employees are experimenting and where organisations are investing. The business case for managed team plans is still being built, but the risk is already here.

Governance and Responsibility in the Age of Workplace AI

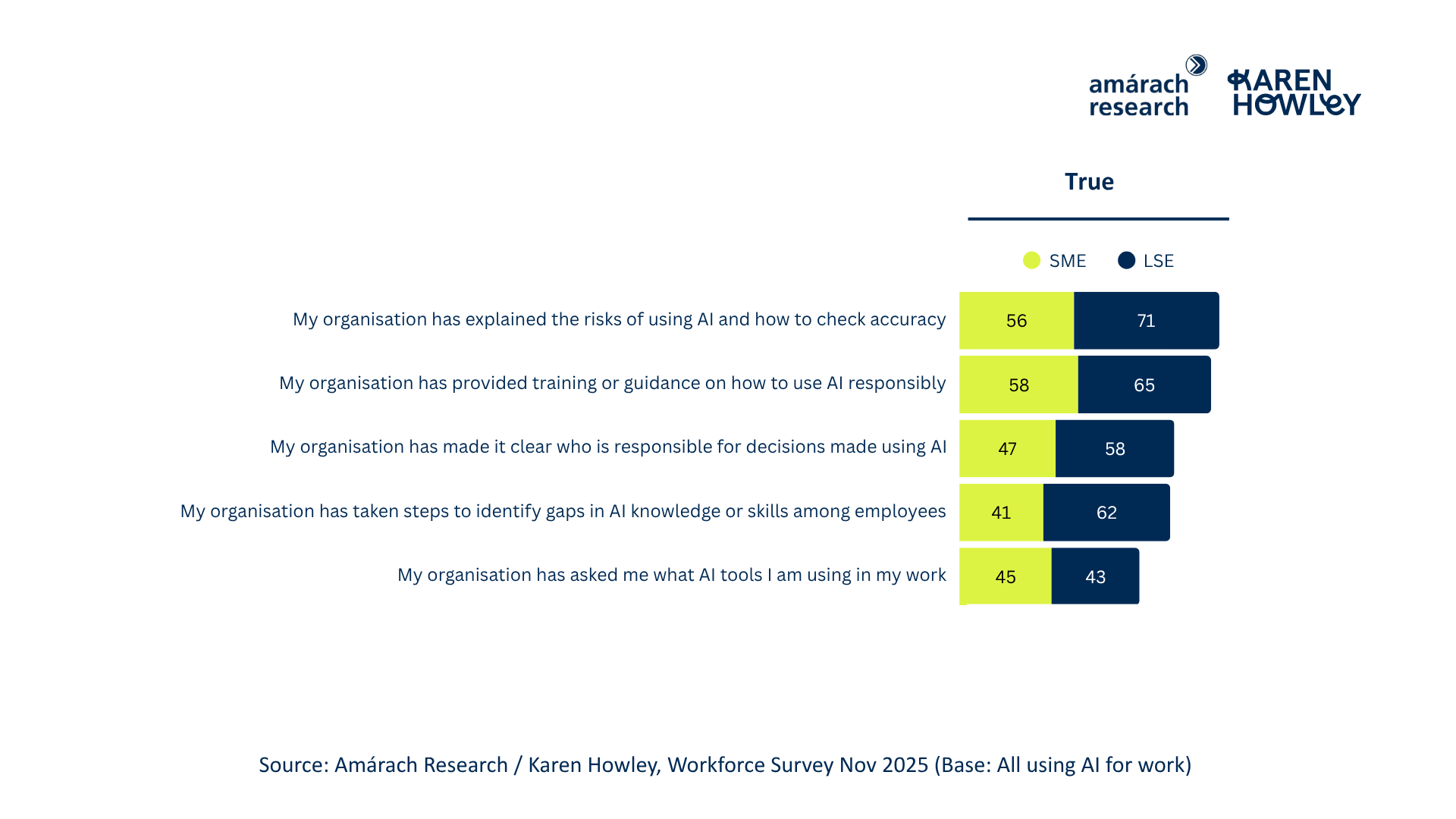

Larger organisations show stronger governance maturity, but visibility gaps persist across the workforce.

Sixty-one per cent report that their employer has explained AI risks and how to check accuracy, yet only 41% say anyone has asked what tools they are using.

Larger organisations appear further ahead, but not by much.

Risk and accuracy explained: 71% in large organisations vs 56% in SMEs

Guidance on responsible use: 65% vs 58%

Accountability for AI-assisted decisions: 58% vs 47%

Steps to identify skills gaps: 62% vs 41%

Asked what tools staff use: 43% in large organisations and 45% in SMEs

These figures show progress, but also complacency. Even in large employers, nearly three in ten workers say AI risks have not been explained, and over a third have no clarity on accountability. Governance maturity is emerging, but not embedded.

For leaders, this is a warning sign. Governance needs to move beyond policy documents that few read and become a management habit, creating space for practical conversations about how the technology’s capabilities should be used. Until that happens, even well-intentioned organisations will remain exposed.

Trust, Accuracy, and the Misinformation Challenge

AI literacy must now include discernment.

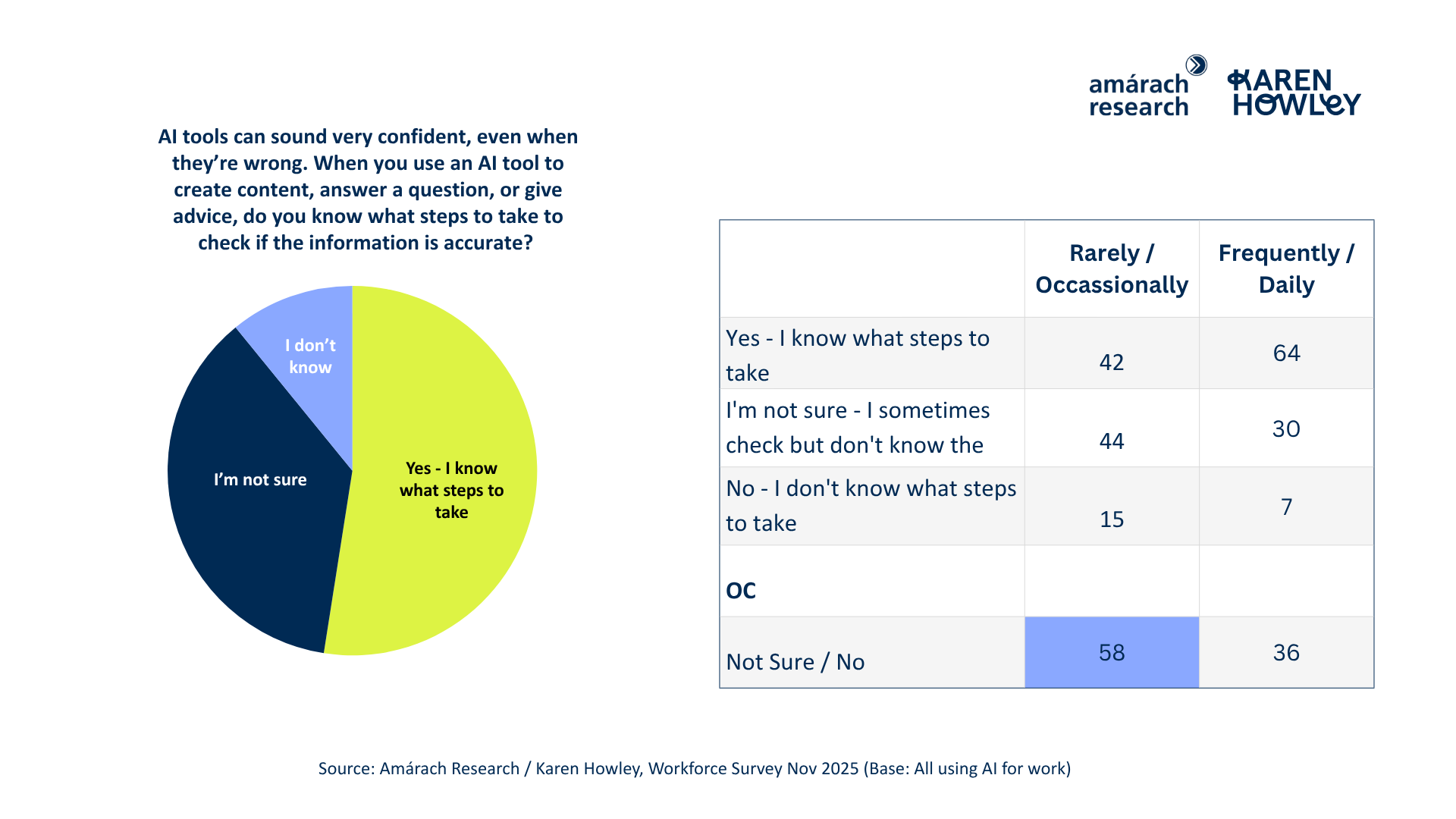

Just over half of those using AI say they know how to check if AI-generated information is accurate (53%). The rest are either unsure (37%) or admit they do not know what to do (11%). In practice, that means nearly half of those using AI at work lack the confidence to verify its outputs.

This is becoming a defining risk in an age of misinformation, where AI-generated text, images, and video can look entirely authentic. As one respondent put it, “It can be very hard to decipher what is AI these days.”

Frequent users are far more likely to know how to verify content than occasional users - evidence that experience builds discernment. But technical familiarity alone is not enough. Employees need the critical thinking skills to cross-check, question, and challenge AI outputs before they enter public or organisational circulation.

AI literacy is no longer just about using tools - it’s about knowing when not to trust them.

Hidden Risks in Everyday Use

Governance gaps rarely look like crises at first. They start as habits.

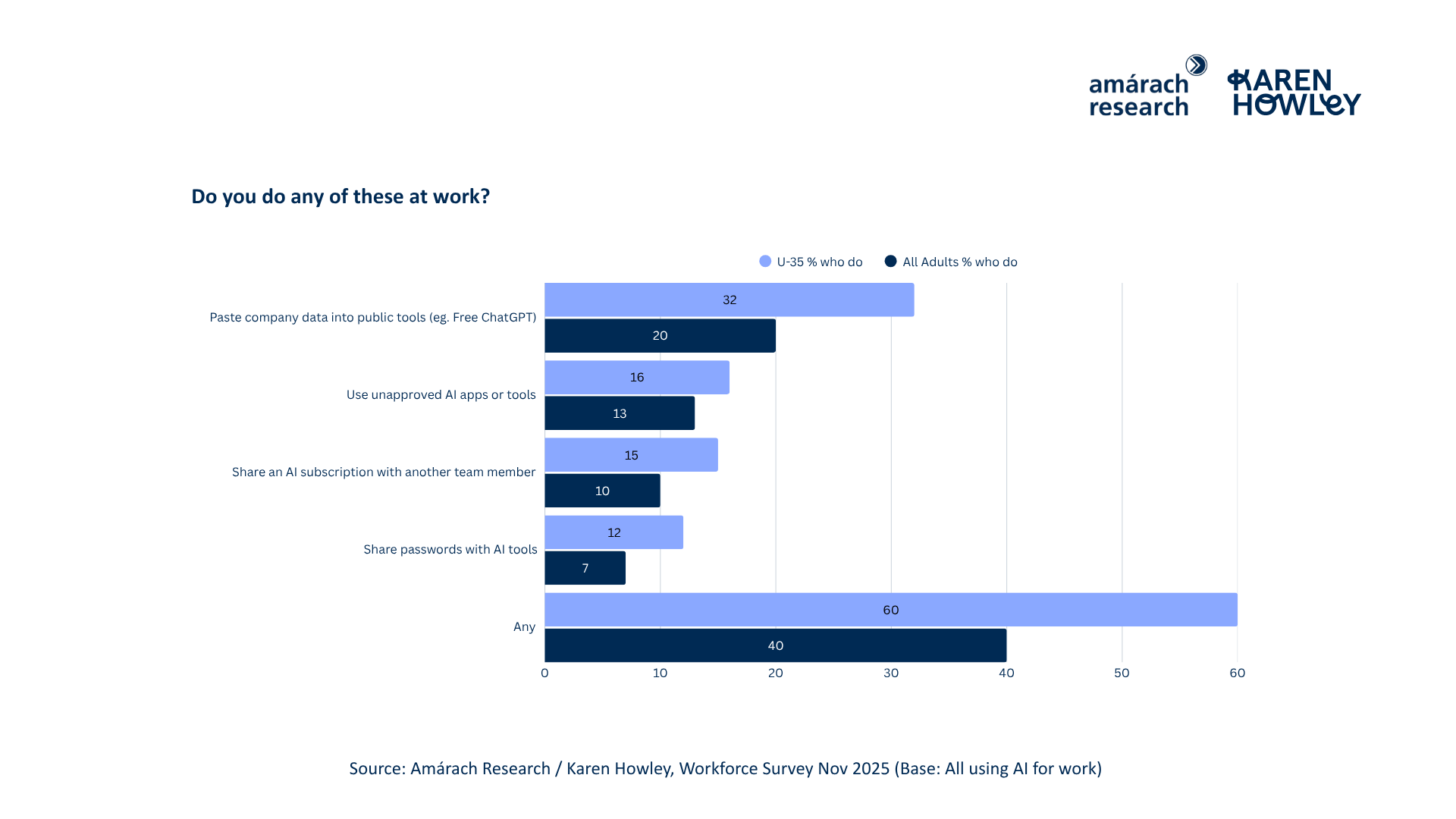

One in three employees using AI have pasted company data into public AI tools or used unapproved AI tools at work. Younger workers are most likely to engage in these practices, reflecting both confidence and complacency.

Pasting client data or internal text into public platforms can expose sensitive information to third parties or model training. Even shared logins, though seemingly harmless, undermine accountability and auditability - both essential for GDPR and EU AI Act compliance.

The answer is not restriction but responsibility. Organisations should define approved tools, explain why free versions pose risks, and train employees to recognise when a prompt or upload crosses a line. AI literacy includes the ability to pause before pressing enter.

Working in an Age of Synthetic Information

The problem is not that AI makes mistakes; it’s that people don’t always know how to spot them.

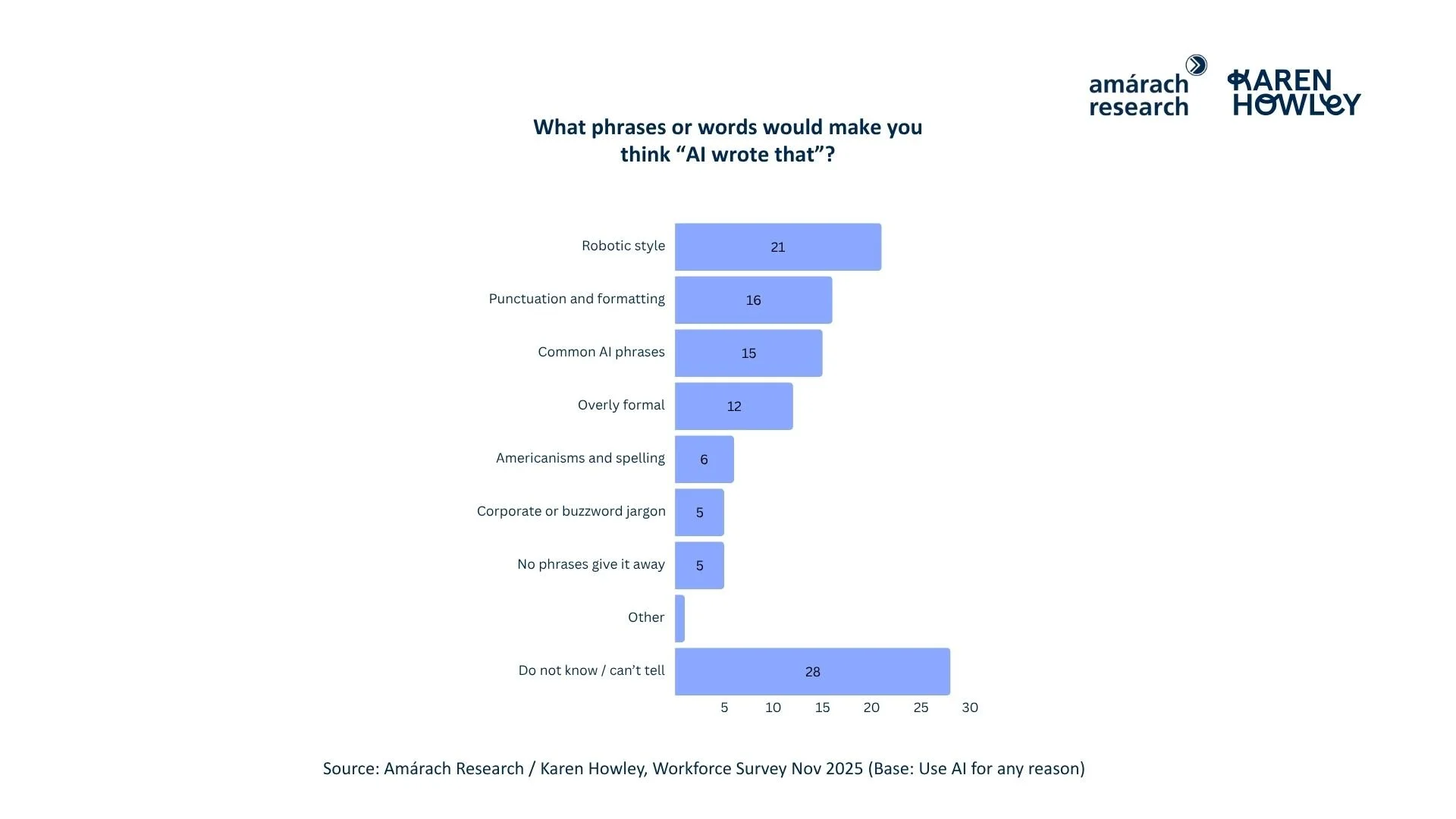

When asked what gives AI-generated text away, respondents pointed to style rather than substance. The most common clues were a robotic tone (21%), unusual punctuation or formatting (16%), and familiar AI chat phrases (15%).

Some described AI writing as “fancy, over-exaggerated sentences” or “try-hard and inauthentic.” Others noticed a lack of warmth or emotion:

“When the text feels robotic, with no personal touch.”

Certain phrases stood out as instant giveaways: “You are absolutely right,” “Generally speaking,” “Delve into,” “Leverage,” “Embark,” and “Good question.”

Others focused on punctuation quirks, especially the overuse of the em dash. As one respondent put it:

“The use of a dash that humans don’t use screams AI to me.”

Perhaps more alarmingly, over a quarter of those using AI for work (28%) say they cannot tell if something was written by AI or do not believe it is possible to know. This lack of discernment raises serious questions about editorial oversight, critical thinking, and judgement within an AI-assisted workforce.

Building AI literacy means equipping people to question and verify what they read, not simply to produce more of it. Vigilance is now a professional competence, and credibility depends on it.

Next Steps: Governance That Scales

You can’t realise ROI from AI until you can rely on your workforce to use it well. That starts with secure foundations.

Across adoption, productivity, training and trust, progress is real but inconsistent. Many organisations are now moving from everyday time savings to automation, a version of scaling that amplifies both value and risk. Without clear governance, visibility and capability, that scale will stall.

The next phase of AI integration is a leadership challenge, not a technical one. It depends less on new tools and more on the systems that keep them safe, compliant and valuable over time.

Four priorities stand out:

1. Gain visibility before you write policies.

You can’t govern what you can’t see. Mapping where, how and by whom AI is being used should come before any new policy or training plan. Without a clear view of real activity, governance remains theoretical.

2. Equip leaders to govern, not just comply.

Governance frameworks work best when leaders understand their roles in applying them. Building literacy on the EU AI Act, enterprise-grade platforms, accountability models and ethical risk ensures governance moves from a compliance exercise to a management capability.

3. Embed governance into the operating model.

Governance cannot live in policy documents; it must become part of daily management practice. Defining decision rights, oversight structures and communication rhythms ensures AI use is consistent, compliant and aligned with business goals.

4. Align AI strategy and governance.

Strategy determines where AI creates value; governance determines how that value is delivered safely. The two must evolve together. Structured assessment helps leaders prioritise high-impact, high-risk initiatives and build governance proportional to business value.

Organisations that secure their foundations today will be the ones that can automate confidently, stay compliant and turn AI into measurable return tomorrow.

Methodology

This report draws on the same nationally representative survey of 1,000 adults aged 18 and over in the Republic of Ireland, conducted online by Amárach Research between 8 and 13 October 2025. Within this national sample, 603 respondents were in full- or part-time employment. Among these workers, 64% reported using AI, while 42% said they use AI as part of their work.

Quotas were set and results weighted by age, gender, socio-economic group and region to reflect the national population. The margin of error for results based on the total sample is ±3.1 percentage points at the 95% confidence level. The survey explored adoption patterns, governance maturity, and employee confidence in the responsible use of AI across different sectors and organisation sizes.
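For readers who want to check the quoted margin of error, the sketch below applies the standard worst-case formula for a proportion. It is an illustration, not the research agency's own calculation: it assumes simple random sampling, a proportion of 50%, and a z-value of 1.96, and it ignores any design effect from quotas and weighting. The function name `margin_of_error` is hypothetical. The subsample size of 603 is the employed group reported above.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of the confidence interval for a proportion, in percentage points.

    Assumes simple random sampling; p=0.5 gives the widest (worst-case) margin,
    and z=1.96 corresponds to a 95% confidence level.
    """
    return 100 * z * math.sqrt(p * (1 - p) / n)


total_sample = margin_of_error(1000)  # all adults surveyed
employed = margin_of_error(603)       # respondents in full- or part-time employment

print(f"Total sample (n=1000): ±{total_sample:.1f} points")  # ±3.1 points
print(f"Employed subsample (n=603): ±{employed:.1f} points")  # ±4.0 points
```

Under these assumptions, figures based only on employed respondents carry a wider margin (about ±4 points) than the headline ±3.1, and results for smaller subgroups, such as employees who use AI for work, would be wider still.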